Unraveling the Future: Deep Learning-Driven De-Homogenization in Smart Textile Fabrication

Greetings, textile aficionados and tech enthusiasts! Today, we’re delving into a fascinating intersection of deep learning and de-homogenization with a detailed look at a GitHub repository by elingaard — deep-dehom. Although this might seem like it belongs to the domain of pure data science, there’s a lot we can uncover about the future of smart textiles from this innovative project. Buckle up as we embark on this rich journey of tech wonders and explore how cutting-edge technologies are woven into the fabric of modern advancements.

Introduction to De-homogenization Using Convolutional Neural Networks

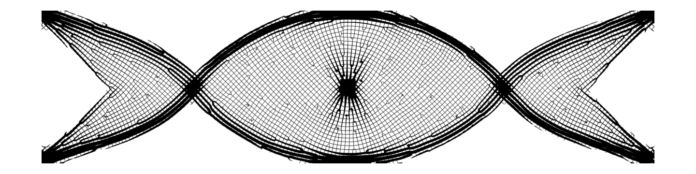

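Our journey starts with the core premise of de-homogenization, a process from structural design in material science and engineering. The GitHub repository houses the code and instructions to replicate the experiments from the paper “De-homogenization using Convolutional Neural Networks.” In homogenization-based design, a structure is first optimized at a coarse scale, where each region is described by averaged properties such as the local orientation and width of a periodic microstructure (lamination parameters). De-homogenization reverses that step: it turns those averaged parameters into a detailed, single-scale design on a fine mesh. The paper's idea is to let a convolutional neural network learn this mapping, avoiding the costly least-squares problems solved by traditional de-homogenization approaches.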

For textiles, this could mean advances in customized fabric production, structural textile design, and smart textiles that respond dynamically to various stimuli.
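To make the idea concrete, here is a deliberately simplified sketch in Python (the language the project itself uses) of the final stage of de-homogenization: turning homogenized parameters into an explicit stripe pattern. It assumes a single constant orientation and member width over the whole grid. `laminate_pattern` and its parameters are hypothetical names, and this is a classical projection idea, not the CNN approach of deep-dehom.

```python
import numpy as np

# Hypothetical helper: constant orientation and width only; not deep-dehom code.
def laminate_pattern(theta, width, period=8.0, n=64):
    """Return an n x n binary stripe pattern (1 = material).

    `theta` (radians) is the direction across the stripes, and `width` in
    [0, 1] is the fraction of each period occupied by a member.
    """
    y, x = np.mgrid[0:n, 0:n].astype(float)
    # Phase field: increases by 1 every `period` pixels along theta.
    phase = (x * np.cos(theta) + y * np.sin(theta)) / period
    # Position within the current period, in [0, 1).
    frac = np.mod(phase, 1.0)
    # Material wherever that position falls inside the member width.
    return (frac < width).astype(np.uint8)
```

For example, `laminate_pattern(0.0, 0.25)` produces vertical stripes covering a quarter of the grid, because each 8-pixel period holds a 2-pixel member. In real designs the orientation and width vary from point to point, and keeping the stripes evenly spaced while they bend to follow the local orientation is exactly the hard part the paper hands to a CNN.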

Getting Started

Setting up the project requires a Python environment: Python 3.8 plus the packages listed in `requirements.txt`, which you can install with Conda and pip to get the computational libraries needed to run the neural network models.

```bash
conda create -n deepdehom python=3.8
conda activate deepdehom
pip3 install -r requirements.txt
```

This establishes your foundational environment where the magic really starts to happen. You are now primed to delve into the dataset generation, model training, and the execution of the pre-trained models provided.
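Before moving on, it can be handy to confirm that the `pip3 install` step actually succeeded. The helper below is a hypothetical, standard-library-only sketch (`missing_requirements` is not part of deep-dehom). It assumes simple `name==version`-style lines and uses `importlib.metadata`, available from Python 3.8, to look up each installed distribution.

```python
import re
from importlib import metadata

# Hypothetical helper, not part of deep-dehom; handles simple requirement lines only.
def missing_requirements(lines):
    """Return package names from requirements-style lines that are not installed."""
    missing = []
    for raw in lines:
        line = raw.split("#", 1)[0].strip()  # drop comments and whitespace
        if not line or line.startswith("-"):  # skip blanks and pip options (-r, -e, ...)
            continue
        # The name is everything before the first specifier, extra, or marker.
        name = re.split(r"[\s<>=!~;\[]", line, maxsplit=1)[0]
        try:
            metadata.version(name)
        except metadata.PackageNotFoundError:
            missing.append(name)
    return missing

# Example (run from the repository root):
# with open("requirements.txt") as fh:
#     print(missing_requirements(fh) or "All requirements installed.")
```

Lines such as VCS URLs are not parsed specially, so they would simply be reported as missing.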

Dataset Generation

An intriguing aspect here is generating synthetic data to train the models. By crafting these datasets, you cultivate a sandbox for the neural network to learn and improve. For those unfamiliar, synthetic data is not gathered from real-world observations but rather created via algorithms to simulate the properties of real-world data.

```bash
python3 data_sampler.py --savepath "path/to/data/train" --n_samples 10000
python3 data_sampler.py --savepath "path/to/data/test" --n_samples 1000
```

These commands generate a training set of 10,000 samples and a test set of 1,000 samples, used to train and to validate the neural network respectively. This step is akin to spinning high-quality yarn before threading the loom to craft a masterpiece.
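What exactly `data_sampler.py` writes is not covered here, so the following is only an illustration of the idea of synthetic training data. It assumes, hypothetically, that each sample is a smooth orientation field on a coarse grid (the kind of averaged description a homogenization-based design produces), built from a few random low-frequency sinusoids. The function names, grid size, and sample counts are all made up for the sketch.

```python
import numpy as np

# Hypothetical generator; not the repository's data_sampler.py.
def sample_orientation_field(rng, n=32, n_modes=3):
    """Draw one smooth random orientation field on an n x n grid.

    Angles are wrapped to [0, pi], since a lamination direction and its
    opposite describe the same layering.
    """
    y, x = np.mgrid[0:n, 0:n] / n  # coordinates in [0, 1)
    theta = np.zeros((n, n))
    for _ in range(n_modes):
        kx, ky = rng.integers(0, 3, size=2)  # low wave numbers keep the field smooth
        phase = rng.uniform(0, 2 * np.pi)
        amp = rng.uniform(0.2, 1.0)
        theta += amp * np.sin(2 * np.pi * (kx * x + ky * y) + phase)
    return np.mod(theta, np.pi)

def make_dataset(n_samples, seed):
    """Stack n_samples fields drawn from a seeded generator."""
    rng = np.random.default_rng(seed)
    return np.stack([sample_orientation_field(rng) for _ in range(n_samples)])

# Small stand-ins for the 10,000 / 1,000 split used above.
train_set = make_dataset(100, seed=0)
test_set = make_dataset(10, seed=1)
```

Seeding the train and test sets differently keeps them independent yet reproducible; arrays like these could be written to disk with `np.save`.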

Training the Model

Training the neural network takes two stages that differ in the loss weight factors discussed in the paper. In the first stage the forking loss is disabled (`--lambda_fork 0.0`); in the second, the frequency loss is disabled (`--lambda_freq 0.0`) and training continues from the first-stage model via `--pretrained`.

```bash
# Step 1: forking loss disabled
python3 main.py "path/to/data" --lambda_orient 1.0 --lambda_freq 1.0 --lambda_tv 1.0 --lambda_fork 0.0

# Step 2: frequency loss disabled, starting from the Step 1 model
python3 main.py "path/to/data" --pretrained "path/to/step1_model/" --lambda_orient 1.0 --lambda_freq 0.0 --lambda_tv 1.0 --lambda_fork 2.0
```
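The `--lambda_*` flags presumably weight individual loss terms that are summed into one training objective. Under that assumption, the minimal Python sketch below shows how the two stages differ: a weight of `0.0` removes a term entirely. The helper `total_loss` and the per-term values are hypothetical and only illustrate the arithmetic.

```python
# Loss weights per training stage, copied from the two training commands.
STAGES = {
    "step1": {"orient": 1.0, "freq": 1.0, "tv": 1.0, "fork": 0.0},
    "step2": {"orient": 1.0, "freq": 0.0, "tv": 1.0, "fork": 2.0},
}

def total_loss(terms, weights):
    """Weighted sum of individual loss terms (hypothetical helper)."""
    return sum(weights[name] * value for name, value in terms.items())

# Made-up per-term values, only to show which terms each stage "sees".
terms = {"orient": 0.5, "freq": 0.25, "tv": 0.125, "fork": 1.0}
for stage, weights in STAGES.items():
    print(stage, total_loss(terms, weights))  # step1 0.875, then step2 2.625
```

In step 1 the forking value contributes nothing; in step 2 the frequency value drops out and the forking value counts double.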

Diving Deeper into Keywords

Forking Loss and Frequency Loss

These two terms might sound arcane but hold significant relevance in the training process.

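**Forking Loss** and **Frequency Loss** are two of the weighted terms in the training objective, alongside the orientation and total-variation (TV) terms set by `--lambda_orient` and `--lambda_tv`. The paper describes its two-step loss as ensuring a periodic output field that follows the local lamination orientations. The **Forking Loss**, enabled only in the second stage, concerns forking: places where lamination members split or merge so that their spacing can stay roughly uniform as the orientation field converges or diverges. The **Frequency Loss**, on the other hand, keeps the spacing of the generated pattern close to the intended frequency. The paper gives the exact definitions of both terms.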
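To build intuition for what a frequency term can measure, here is a toy version, not the paper's formulation. It assumes the stripe pattern is written as cos(2π·φ) of a phase field φ, a common representation in classical de-homogenization, so that the gradient magnitude |∇φ| is the local number of stripes per unit length. The loss penalizes deviations from a target frequency; `toy_frequency_loss` is a hypothetical name.

```python
import numpy as np

# Toy illustration only; not the frequency loss used in deep-dehom.
def toy_frequency_loss(phase, target_freq, h=1.0):
    """Mean squared mismatch between local stripe frequency and a target.

    `phase` is a 2D field whose level sets of cos(2*pi*phase) form the
    stripes; `h` is the grid spacing.
    """
    gy, gx = np.gradient(phase, h)  # derivatives along rows (y) and columns (x)
    local_freq = np.hypot(gx, gy)   # stripes per unit length at each pixel
    return float(np.mean((local_freq - target_freq) ** 2))
```

A phase that grows at exactly the target rate, such as `0.125 * x`, scores zero, while one growing twice as fast scores (0.25 - 0.125)² = 0.015625.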

Jupyter Notebooks in Textile Research

One noteworthy tool highlighted is the Jupyter Notebook. This open-source web application allows you to create and share documents that contain live code, equations, visualizations, and narrative text. In the context of textile research, Jupyter Notebooks empower textile scientists to document processes, experiment with new algorithms, and visualize data in a cohesive, interactive manner.

Running Pre-Trained Models

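To make experimentation easier, the repository provides a pre-trained model that can be run from the Jupyter Notebook `lam_width_projection.ipynb`. As its name suggests, the notebook covers lamination width projection, the post-processing step that turns the network's periodic output into a design with the desired member widths. It offers a practical starting point for seeing how de-homogenizing your designs could lead to new innovations in textile technology.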
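The paper describes this post-processing as applying a distance transform to the skeleton of the network's output field, projecting the desired lamination widths while ensuring a predefined minimum length scale and volume fraction. The sketch below shows only the core distance-transform step, with a single uniform width and a brute-force distance computation. Skeleton extraction, spatially varying widths, and the length-scale and volume-fraction control are omitted, and `project_width` is a hypothetical name rather than code from the notebook.

```python
import numpy as np

# Hypothetical, simplified width projection; not the notebook's implementation.
def project_width(skeleton, width):
    """Thicken a one-pixel skeleton into members roughly `width` pixels wide.

    Brute-force Euclidean distance transform: each pixel's distance to the
    nearest skeleton pixel. Pixels within width / 2 become material.
    Assumes a non-empty skeleton and a small grid.
    """
    pts = np.argwhere(skeleton)                         # (k, 2) skeleton coordinates
    rows, cols = np.indices(skeleton.shape)
    grid = np.stack([rows, cols], axis=-1)              # (h, w, 2) pixel coordinates
    diff = grid[:, :, None, :] - pts[None, None, :, :]  # (h, w, k, 2)
    dist = np.linalg.norm(diff, axis=-1).min(axis=-1)   # nearest-skeleton distance
    return (dist <= width / 2).astype(np.uint8)

# Demo: a vertical one-pixel line thickened to a 3-pixel member.
skeleton = np.zeros((20, 20), dtype=np.uint8)
skeleton[:, 10] = 1
member = project_width(skeleton, 3)
print(member[0])  # columns 9, 10 and 11 are set to 1
```

At realistic resolutions the brute-force step should give way to an exact linear-time transform such as `scipy.ndimage.distance_transform_edt(skeleton == 0)`, which returns each pixel's distance to the nearest skeleton pixel.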

Future of Smart Textiles

Diving into such a repository sets the stage to marvel at the endless possibilities smart textiles hold. Imagine hyper-responsive fabric that adjusts its insulative properties based on the ambient temperature, dynamically changing weave patterns for optimal performance. This future isn’t far-flung; it’s rapidly coming into focus with each technological advancement.

Additional Applications

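In addition to these optimized fabric structures, another essential facet is **topology optimization**. Generally, this refers to determining the best material layout within a given design space for a set of loads and boundary conditions. It is also where de-homogenization comes from: the paper behind deep-dehom targets structural compliance minimization, turning coarse homogenization-based results into detailed, high-resolution designs. Applied to textiles, the same principle could yield products that are not only lighter and stronger but also consume less raw material, promoting sustainability.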
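Density-based topology optimization commonly relies on the SIMP (Solid Isotropic Material with Penalization) interpolation, E(ρ) = E_min + ρ^p (E_0 - E_min), where ρ in [0, 1] is the local material density. The short Python sketch below evaluates it. This is a general illustration of topology optimization, not part of deep-dehom (whose paper starts from homogenization-based lamination designs instead), and the defaults p = 3 and E_min = 1e-9 are typical choices rather than values taken from the repository.

```python
# General SIMP illustration; not code from deep-dehom.
def simp_stiffness(rho, e0=1.0, e_min=1e-9, p=3.0):
    """Modified SIMP interpolation: Young's modulus for density rho in [0, 1].

    With p > 1, intermediate densities give disproportionately little
    stiffness for their material cost, nudging the optimizer toward clear
    solid/void layouts. e_min keeps void regions from becoming singular.
    """
    if not 0.0 <= rho <= 1.0:
        raise ValueError("rho must lie in [0, 1]")
    return e_min + rho ** p * (e0 - e_min)
```

With p = 3, an element at half density uses half the material but delivers only about 0.5³ = 0.125 of the full stiffness, which is why SIMP-based optimizers drift toward crisp, manufacturable layouts.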

Adopting Technological Innovations

In the grand tapestry of textile innovation, adopting such advanced technologies underscores a paradigm shift. The fusion of deep learning and textile manufacturing propels industries into a new age where data-driven design meets textile craftsmanship. The applications are vast, covering everything from fashion and military gear to medical textiles that actively monitor health vitals.

Open Source Collaboration

Because deep-dehom is hosted on GitHub, it can also benefit from the platform's collaboration tools, such as GitHub Actions for automating workflows, Codespaces for instant cloud development environments, and GitHub Copilot for AI-assisted coding.

Conclusion

Navigating through elingaard’s deep-dehom repository isn’t just a lesson in advanced data science; it’s a peek into the future of textile engineering. With deep learning poised to make de-homogenization far faster, textile researchers and manufacturers gain powerful new tools to weave the next generation of smart fabrics.

By leveraging these technological marvels, the textile industry stands on the cusp of unprecedented innovation, paving the way for fabrics that are smarter, more adaptive, and intrinsically attuned to the needs and complexities of the modern world.

So there you have it! We’ve woven through de-homogenization using convolutional neural networks, and we hope this journey sparks fresh curiosity about the technology behind today’s textile innovations. Continue exploring, experimenting, and who knows, the next smart textile breakthrough might just spring from your loom of creativity and understanding.

Keep fabricating excellence, and stay tuned for more deep dives into the world where textiles meet technology!

Keywords: De-homogenization, Convolutional Neural Networks, Smart Textiles (Post number: 24), Textile Innovation, Dataset Generation